In this project, I leveraged SetFit, Hugging Face's prompt-free few-shot classification framework, to tackle the challenging SST-5 task: fine-grained sentiment analysis across five classes (very negative to very positive).

What makes SetFit so compelling is its efficiency and simplicity:

- Contrastive fine-tuning of a Sentence Transformer backbone on text pairs generated from a small set of labeled examples.

- Use of the resulting embeddings to train a lightweight classifier head, with no handcrafted prompts or very large models required.
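To make the first stage concrete, here is a minimal pure-Python sketch of how a handful of labeled sentences can be expanded into contrastive pairs: same-label pairs are marked similar (1.0) and cross-label pairs dissimilar (0.0). The function `make_contrastive_pairs` and its one-positive/one-negative-per-example sampling are illustrative assumptions, not SetFit's actual implementation. In SetFit, pairs like these drive contrastive fine-tuning of the Sentence Transformer, after which a classifier head (logistic regression by default) is fit on the resulting embeddings.

```python
import random


def make_contrastive_pairs(texts, labels, num_iterations=1, seed=0):
    """Build (text_a, text_b, similarity) pairs from a few labeled examples.

    Hypothetical helper: for every example, draw one same-label partner
    (similarity 1.0) and one different-label partner (similarity 0.0).
    This mirrors the idea behind SetFit's first stage, not its exact sampler.
    """
    rng = random.Random(seed)
    examples = list(zip(texts, labels))
    pairs = []
    for _ in range(num_iterations):
        for text, label in examples:
            positives = [t for t, l in examples if l == label and t != text]
            negatives = [t for t, l in examples if l != label]
            if positives:
                pairs.append((text, rng.choice(positives), 1.0))
            if negatives:
                pairs.append((text, rng.choice(negatives), 0.0))
    return pairs
```

With four sentences split across two labels, each sentence contributes one positive and one negative pair, so a single iteration yields eight pairs. SetFit exposes its own settings for how many pairs are sampled; `num_iterations` here only approximates that idea.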

This project highlights how evolving NLP techniques, from static embeddings to contextual transformers, affect performance and interpretability. Fine-tuned transformer models like DistilBERT deliver stronger results, but classic approaches like Word2Vec + LSTM remain practical baselines. Combining both perspectives gives a well-rounded approach to real-world sentiment analysis.


🔍 Overview

Understanding and quantifying sentiment in text has always intrigued me, especially when it allows us to draw actionable insights from subjective opinions. In this project, I explored sentiment classification using both traditional word embeddings and modern transformer models on the SST-5 dataset.

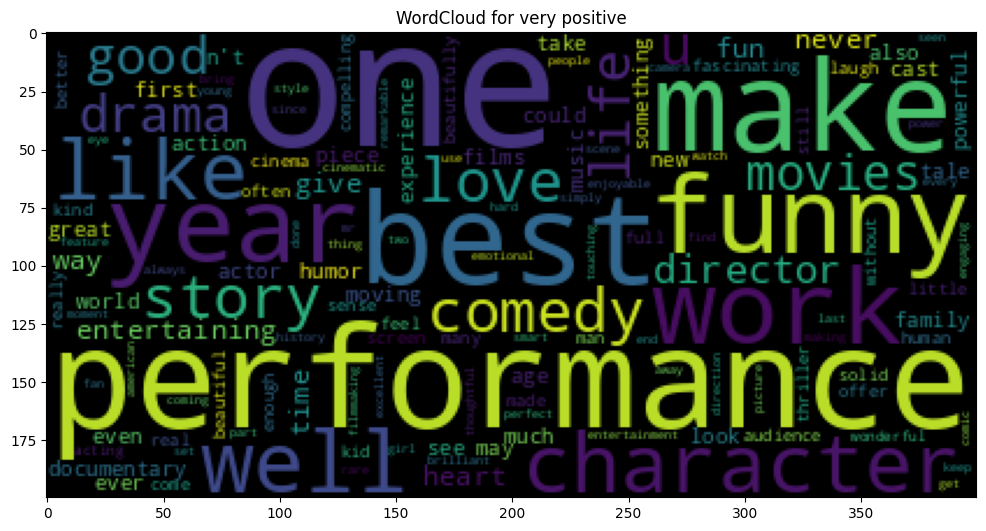

The main goal was to compare the performance and interpretability of a Word2Vec-based model with that of DistilBERT for classifying fine-grained sentiment labels across five categories, from very negative to very positive. By analyzing and preprocessing the text data, visualizing linguistic patterns, and training deep learning models, I aimed to understand which approach better captures the nuances of sentiment in movie reviews.

🛠️ Tools & Technologies Used

Languages: Python

Libraries & Frameworks:

- Data Processing: pandas, nltk, scipy, datasets

- Visualization: matplotlib, WordCloud

- Traditional NLP: gensim (Google News Word2Vec)

- Deep Learning: TensorFlow, Keras

- Transformers: transformers (DistilBERT, Hugging Face)

- Evaluation: evaluate, scikit-learn

🌟 Key Features & Deliverables

📊 Data Cleaning & Analysis

- Loaded and analyzed the SST-5 dataset, which has five sentiment classes.

- Verified that the data contained no missing values or duplicate rows.

- Created summary statistics and visualizations of the sentiment distribution and vocabulary frequency.

- Used WordClouds and bar plots to identify sentiment-driven linguistic features.
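The checks above can be sketched without third-party dependencies. This minimal version assumes the dataset is available as parallel lists of texts and integer labels; it counts empty texts and exact duplicates, tallies the label distribution, and lists the most frequent words per label (the kind of counts that feed word clouds and bar plots). `summarize_dataset` is a hypothetical helper; the project itself used pandas and WordCloud for this step.

```python
import re
from collections import Counter


def summarize_dataset(texts, labels, top_k=3):
    """Hypothetical EDA helper: missing/duplicate counts, label counts, top words per label."""
    missing = sum(1 for t in texts if t is None or not str(t).strip())
    duplicates = len(texts) - len(set(texts))  # exact-duplicate texts
    label_counts = Counter(labels)

    words_by_label = {}
    for text, label in zip(texts, labels):
        if not isinstance(text, str):
            continue  # skip missing entries
        tokens = re.findall(r"[a-z']+", text.lower())
        words_by_label.setdefault(label, Counter()).update(tokens)

    top_words = {
        label: [word for word, _ in counter.most_common(top_k)]
        for label, counter in words_by_label.items()
    }
    return {
        "missing": missing,
        "duplicates": duplicates,
        "label_counts": dict(label_counts),
        "top_words": top_words,
    }
```

Stopwords are not removed here, so on real reviews the top words would be dominated by function words unless the text is filtered first.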

🔎 Feature Engineering

- Preprocessed text using tokenization and stopword removal.

- Transformed data into padded sequences for deep learning compatibility.

- Extracted semantic features using Google’s Word2Vec embeddings.
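The preprocessing steps above can be sketched in plain Python, assuming a simple letter-based tokenizer. The tiny `STOPWORDS` set stands in for NLTK's English list, and the `vectors` dict stands in for the pretrained Google News vectors (a gensim `KeyedVectors` object supports the same `in` and `[]` lookups). Padding and truncation are applied at the front of each sequence, mirroring the defaults of Keras's `pad_sequences`. All function names here are hypothetical.

```python
import re

# Hypothetical stand-in; the project used NLTK's English stopword list.
STOPWORDS = {"the", "a", "an", "and", "is", "it", "of", "this"}


def tokenize_text(text):
    """Lowercase, extract letter runs, and drop stopwords."""
    return [t for t in re.findall(r"[a-z']+", text.lower()) if t not in STOPWORDS]


def build_vocab(token_lists):
    """Map each token to an integer id in first-seen order; id 0 is reserved for padding."""
    vocab = {}
    for tokens in token_lists:
        for tok in tokens:
            if tok not in vocab:
                vocab[tok] = len(vocab) + 1
    return vocab


def to_padded_sequences(token_lists, vocab, max_len):
    """Encode tokens as ids, keep the last max_len ids, and left-pad with 0."""
    padded = []
    for tokens in token_lists:
        ids = [vocab[t] for t in tokens if t in vocab][-max_len:]
        padded.append([0] * (max_len - len(ids)) + ids)
    return padded


def build_embedding_matrix(vocab, vectors, dim):
    """Row i holds the pretrained vector for token id i; words without a vector stay all-zero."""
    matrix = [[0.0] * dim for _ in range(len(vocab) + 1)]
    for tok, idx in vocab.items():
        if tok in vectors:
            matrix[idx] = list(vectors[tok])
    return matrix
```

A matrix like this would typically initialize a frozen embedding layer, so each word keeps its static Word2Vec vector while the LSTM trains on top of it.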

🤖 Model Implementation

- Word2Vec + LSTM: Built a Bidirectional LSTM model using static pretrained Word2Vec vectors.

- DistilBERT Fine-tuning: Leveraged Hugging Face’s DistilBERT model and fine-tuned it on SST5 using the Transformers library.

- Applied early stopping and tracked validation performance.
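Early stopping boils down to a patience rule on a validation metric. The sketch below, a hypothetical helper rather than either library's code, replays a list of per-epoch validation losses and reports the best epoch and the epoch at which training would halt. Keras's `EarlyStopping` callback (with `restore_best_weights=True`) and Hugging Face's `EarlyStoppingCallback` implement rules of this kind; the `patience` and `min_delta` values used in the project are not stated, so the defaults here are assumptions.

```python
def early_stopping(val_losses, patience=2, min_delta=0.0):
    """Return (best_epoch, stop_epoch) under a patience-based early-stopping rule.

    An epoch counts as an improvement only if its loss beats the best loss so far
    by more than min_delta. Training stops after `patience` consecutive epochs
    without improvement; the weights from best_epoch would then be restored.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    # Never triggered: training ran for every epoch in the list.
    return best_epoch, len(val_losses) - 1
```

For losses `[0.9, 0.7, 0.65, 0.66, 0.68, 0.6]` with `patience=2`, the rule stops at epoch 4 and keeps epoch 2; with `patience=3` it would have survived long enough to reach the better loss at epoch 5, which shows how sensitive the outcome is to this setting.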

🔁 Model Comparison

- Compared both models using evaluation metrics like accuracy and AUC.

- Examined differences in interpretability and training efficiency.

- The Word2Vec model required more preprocessing but trained faster and was more interpretable; DistilBERT was more accurate and robust.
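For five classes, AUC has to be generalized, and the write-up does not say which variant was used. One common choice, assumed here, is to compute a one-vs-rest AUC per class from the predicted probabilities and macro-average them. The dependency-free sketch below shows that alongside accuracy; it assumes each model outputs one probability per class, and its pairwise AUC loop is quadratic, so scikit-learn's `roc_auc_score(y_true, probs, multi_class="ovr")` (macro-averaged by default) is the practical choice on the full dataset.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_auc(scores, is_positive):
    """Probability that a random positive outscores a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, is_positive) if y]
    neg = [s for s, y in zip(scores, is_positive) if not y]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(y_true, probs, num_classes):
    """Average one-vs-rest AUC, scoring class c by each row's probability for c."""
    aucs = []
    for c in range(num_classes):
        scores = [row[c] for row in probs]
        aucs.append(binary_auc(scores, [y == c for y in y_true]))
    return sum(aucs) / num_classes
```

Because AUC uses the full probability ranking while accuracy only uses the argmax, the two can disagree: a model may misclassify an example yet still rank it sensibly, which is one reason to report both.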

🎯 Key Insights & Business Value

📈 Performance Insights

- DistilBERT consistently outperformed Word2Vec in capturing nuanced sentiment, especially on classes such as “Neutral” and “Very Negative”.

- The Word2Vec model, while less accurate, offered greater interpretability and was better suited to environments with limited compute.
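Per-class observations like these are easiest to check with a confusion matrix and per-class recall. The sketch assumes labels 0 to 4 map to very negative through very positive (the usual SST-5 ordering); running it on each model's predictions and comparing the recall values class by class shows where one model gains over the other. The helper names are hypothetical.

```python
# Assumed label order: 0 = very negative ... 4 = very positive.
LABELS = ["Very Negative", "Negative", "Neutral", "Positive", "Very Positive"]


def confusion_matrix(y_true, y_pred, num_classes=len(LABELS)):
    """cm[i][j] counts examples whose true class is i and predicted class is j."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_recall(cm):
    """Share of each true class predicted correctly (0.0 if the class never occurs)."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]


def recall_report(recalls):
    """Attach human-readable class names to a per-class recall list."""
    return dict(zip(LABELS, recalls))
```

Off-diagonal cells are also informative on SST-5: most errors in an ordinal task like this tend to fall into neighboring classes, and the matrix makes that visible at a glance.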

🧠 Practical Implications

- Transformer-based models are better suited for high-stakes NLP tasks requiring subtle contextual understanding.

- Traditional embedding-based LSTM models can still be valuable when speed and resource constraints matter.

🔄 Scalability & Extension

- The workflow can be extended to other sentiment-rich datasets (e.g., product reviews, social media).

- Both pipelines support plug-and-play extensions with other models or embeddings.
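One way to get that plug-and-play behavior is to have each pipeline depend only on a small encoder interface, so that a Word2Vec averager, a DistilBERT encoder, or a Sentence Transformer can be swapped in without touching the rest. The sketch below uses `typing.Protocol` with toy stand-ins (`BagOfWordsEncoder`, `PolarityRule`); these classes are illustrative assumptions and do not come from the project's notebook.

```python
from typing import List, Protocol, Sequence


class TextEncoder(Protocol):
    """Anything that turns a batch of texts into fixed-size feature vectors."""

    def encode(self, texts: Sequence[str]) -> List[List[float]]: ...


class Classifier(Protocol):
    """Anything that maps feature vectors to integer labels."""

    def predict(self, features: List[List[float]]) -> List[int]: ...


class BagOfWordsEncoder:
    """Toy encoder: counts occurrences of each word in a fixed vocabulary."""

    def __init__(self, vocabulary: Sequence[str]):
        self.vocabulary = list(vocabulary)

    def encode(self, texts: Sequence[str]) -> List[List[float]]:
        return [[float(t.lower().split().count(w)) for w in self.vocabulary] for t in texts]


class PolarityRule:
    """Toy classifier: label 4 if the first feature outweighs the second, else 0."""

    def predict(self, features: List[List[float]]) -> List[int]:
        return [4 if f[0] > f[1] else 0 for f in features]


class SentimentPipeline:
    """Couples any encoder with any classifier; either side can be replaced independently."""

    def __init__(self, encoder: TextEncoder, classifier: Classifier):
        self.encoder = encoder
        self.classifier = classifier

    def predict(self, texts: Sequence[str]) -> List[int]:
        return self.classifier.predict(self.encoder.encode(texts))
```

Because `Protocol` uses structural typing, a real encoder only needs a matching `encode` method to fit; no inheritance from `TextEncoder` is required.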